Today’s DevOps organizations are faced with maintaining on-demand access to high-volume cloud services.

To work as needed, these systems must constantly draw on extremely large datasets, at incredible scale, with tremendous speed and ever increasing efficiency.

The sheer size of the data, the number of events, and the number of correlated systems require that data be stored by the petabyte. With such large amounts of data, the only way to keep your competitive advantage is through automation.

Automated Database & Application Development: Crawl, Walk, Run

While automated application updates have been common for years, automated database updates are another story. Any production engineer knows that if you make a change to the schema of your database, there will likely be negative consequences when you update that schema in production.

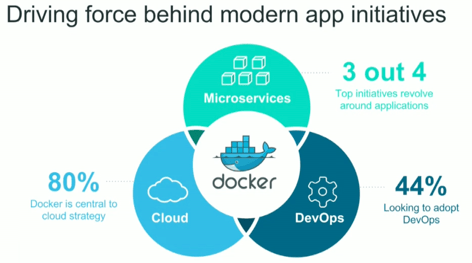

More and more companies are adopting a DevOps approach in the hopes of moving their operations and development teams closer together, while automating their core application infrastructure and build processes. At the same time, many database administrators (DBAs) are facing challenges with respect to source control, automated continuous delivery, and containers.

In this article, we’ll dive into DevOps adoption, agile build architecture, and database automation, as well as the best practices required to see them through successfully.

We will also examine the practices of two unicorns ($1B+ companies) that have made the transition to DevOps, Uber and GitHub, and dig into their successes at delivering high-availability solutions at scale.

These industry titans are turning their operations departments, previously considered “cost centers,” into a competitive advantage: they create value for their customers by automating many of today’s common data problems.

Each company is solving its use cases differently, but both are winning on performance, network, data, and product innovation.

Why the Move to DevOps Culture

In the 1990s, most software companies were shipping software via the infamous Waterfall project delivery lifecycle, which usually resulted in projects using a methodology best suited for building houses and not software. The move to Agile software development occurred because Waterfall had a number of shortcomings.

In particular, complex problems with many late changes to product requirements made Waterfall inefficient and slow. The shift towards Agile allowed for smaller, more frequent releases of software, each requiring a much smaller testing effort than a traditional Waterfall-style release. Over time, development teams moved from long 12-18 month cycles to shorter ones, releasing at quarterly or monthly intervals.

As you would expect, customers want features faster and don’t want to deal with a lot of change all at once. Most of all, they demand that their software be reliable. The introduction of Agile was a big step forward in reducing the time-to-delivery for the customer. But it was only the beginning.

The true future of deployments is to deploy continuously and move to a full microservices architecture as envisioned by Martin Fowler. Specifically, we need to move to full end-to-end ownership, which will support developers in becoming better at running production environments.

This shift towards ever more continuous delivery creates a competitive advantage by giving customers what they want when they want it, while enhancing product resilience and reliability, without overwhelming the user.

In other words, continuous delivery in effect delivers perpetual improvement.

Best Practices: 3 Takeaways from the Field

In today’s production environment, we need applications, code, infrastructure, and databases that have been field tested at scale and peer-reviewed. The best DevOps practices and lessons from the field inform us that there are three key takeaways to embrace to achieve success with database automation:

- Source control for database code

- Automated continuous delivery

- Containers

Let’s unpack that.

1. Source Control for Database Code

At the core of DevOps lie sound engineering principles, beginning with source control. While application developers have been using source control for years, many environments still do not impose source control on their backend operations and database developers.

To ensure data and database integrity, it’s important to require that backend operations and database developers apply the same level of scrutiny to their software practices, including the use of source control, as is applied on the front end.

In short, DBAs are no longer a secluded island holding the keys to applications, sustainability, and an infrastructure’s capacity to grow. They are part and parcel of the entire DevOps ecosystem and must, accordingly, be included within team workflows and duly considered at every stage of the software development lifecycle.

This is of particular importance when it comes to stored procedures, triggers, and any other logic not captured in application source code. To make this transition smoother, there are a few commercial third-party database development tools that come with the ability to check in your stored procedures, triggers, and other database logic.

The ability to take advantage of source control without the overhead of switching toolsets makes these third-party solutions quite attractive.
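To make the idea concrete, here is a minimal sketch of database logic living in source control as ordered migrations. It uses Python's built-in `sqlite3` module purely for illustration; the table names, the trigger, and the `schema_version` bookkeeping table are all assumptions, not something the tools mentioned above prescribe. In a real repository each migration would more likely be its own `.sql` file.

```python
import sqlite3

# Hypothetical migrations. Each entry is (version, SQL statement) and would
# normally be a file checked in alongside the application code.
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
    (3, "CREATE TABLE customer_audit (customer_id INTEGER, action TEXT)"),
    # Trigger logic is versioned like any other change.
    (4, "CREATE TRIGGER log_new_customer AFTER INSERT ON customers "
        "BEGIN INSERT INTO customer_audit VALUES (NEW.id, 'created'); END"),
]


def apply_migrations(conn, migrations):
    """Apply every migration not yet recorded; return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, statement in sorted(migrations):
        if version in applied:
            continue  # already present in this database
        conn.execute(statement)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        newly_applied.append(version)
    conn.commit()
    return newly_applied


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(apply_migrations(conn, MIGRATIONS))  # first run applies everything
    print(apply_migrations(conn, MIGRATIONS))  # second run finds nothing to do
```

Because the applied versions are recorded in the database itself, the same script can run safely against any environment, and the repository history shows who changed which trigger or table and when.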

2. Automated Continuous Delivery

One of the most strategic advantages of adopting DevOps principles is related to the build pipeline. Continuous delivery (CD) is an approach to software development that relies on automatically building, testing, and preparing your company’s code changes for frequent release. This automated process results in a software delivery that is more efficient and less dependent on human intervention.

With the entire build life cycle automated, companies can save thousands of person-hours per month or more while increasing their developer productivity and job satisfaction. CD lets you more easily perform additional testing on your code because the entire process is automated.

As Gene Kim, DevOps guru and lead author of The Phoenix Project, will attest, companies that embrace DevOps (CD included) enjoy considerable advantages over the rest of the technology sector, including:

- 24x faster Mean Time to Recover (MTTR)

- 200x more frequent deployments

- 3x lower change failure rate
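For teams that want to track these numbers themselves, the sketch below shows one way two of them could be computed from a deployment log. The log format and its entries are hypothetical, and MTTR is treated here as the average time from a failed deployment to restored service, which is an assumption about how it is measured.

```python
from datetime import datetime, timedelta

# Hypothetical deployment log: (deployed_at, failed, restored_at or None).
DEPLOYS = [
    (datetime(2017, 3, 1, 9, 0), False, None),
    (datetime(2017, 3, 1, 15, 0), True, datetime(2017, 3, 1, 15, 20)),
    (datetime(2017, 3, 2, 10, 0), False, None),
    (datetime(2017, 3, 3, 11, 0), True, datetime(2017, 3, 3, 11, 40)),
]


def change_failure_rate(deploys):
    """Fraction of deployments that caused a failure."""
    return sum(1 for _, failed, _ in deploys if failed) / len(deploys)


def mean_time_to_restore(deploys):
    """Average time between a failed deployment and service being restored."""
    outages = [restored - start for start, failed, restored in deploys if failed]
    return sum(outages, timedelta()) / len(outages)


if __name__ == "__main__":
    print(change_failure_rate(DEPLOYS))   # 0.5
    print(mean_time_to_restore(DEPLOYS))  # 0:30:00
```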

GitHub is a great example of a company that uses best-of-breed DevOps practices to support its 24M developers and 117K businesses. As Shlomi Noach explained in his MySQL Infrastructure Testing Automation @ GitHub presentation, GitHub expects its MySQL production databases to fail over in under 30 seconds during an unplanned failure. (Scheduled failovers take only a few seconds.)

This would not be possible without Continuous Delivery. CD benefits also include increased developer productivity. For example, configuring newly developed features to be deployed as soon as the code has been checked into source control ensures that those features are made available to users immediately.

GitHub’s continuous feedback loop dramatically increases developer productivity throughout the entire organization.

3. Containers!

There are many recommendations in the field and in the media to use containers, but our second unicorn, Uber, made waves by breaking the application, the database, and the monitoring instrumentation into separate containers.

For Uber, there were several advantages to embracing containers, such as making their MySQL database updates seamless and baking most of the configuration data into the container image, thus making it immutable.

The reason Uber went all-in on containers was that the technology met three key requirements for their MySQL strategy:

- Able to run multiple database processes on each host

- Automate everything

- Have a single entry point to manage and monitor all clusters across all data centers

Known as Schemadock, Uber’s solution means they run MySQL in Docker containers with “goal states” that define their database cluster topology in configuration files. Each container also includes an agent that then applies those topologies to each database.

The key to making this work was creating a centralized service that could both maintain and monitor the “goal states” for each instance while ensuring there would be no configuration or database drift.
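As a rough illustration of what drift detection against goal states can look like, the sketch below compares the desired state of each instance with what that instance reports. The instance names, the settings, and the dictionary layout are hypothetical and are not taken from Uber's implementation.

```python
# Hypothetical goal states held by a central service, keyed by instance name.
GOAL_STATES = {
    "db-a1": {"image": "mysql-gold:42", "role": "master"},
    "db-a2": {"image": "mysql-gold:42", "role": "minion"},
}


def find_drift(goal_states, reported_states):
    """Map each drifted instance to {setting: (wanted, found)}."""
    drift = {}
    for instance, goal in goal_states.items():
        reported = reported_states.get(instance, {})
        diffs = {
            key: (wanted, reported.get(key))
            for key, wanted in goal.items()
            if reported.get(key) != wanted
        }
        if diffs:
            drift[instance] = diffs
    return drift


if __name__ == "__main__":
    reported = {
        "db-a1": {"image": "mysql-gold:42", "role": "master"},
        "db-a2": {"image": "mysql-gold:41", "role": "minion"},  # stale image
    }
    print(find_drift(GOAL_STATES, reported))
    # {'db-a2': {'image': ('mysql-gold:42', 'mysql-gold:41')}}
```

An instance that reports nothing at all shows up as drifted on every setting, which is usually the behavior you want from a monitoring check.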

Uber-level commitment to containers, though, requires a serious technological investment and cultural change. As Uber mentions in their engineering blog, if an organization chooses to implement containers, they must develop core competencies in the following areas:

- Image building and distribution

- Monitoring of containers

- Upgrading experts in Docker or container orchestration

- Log collection

- Networking

With the Schemadock strategy in place, Uber simply creates a new gold image any time they want to make a configuration, application, or database change. Organizations using this strategy can protect against configuration drift, without the need for Puppet or Ansible, by baking most of their configuration into the Docker image and then ensuring that the version is actively monitored.

For example, when a new version of MySQL is available, the deployment process is simple. First, build the new gold image. Then operations shut down containers in an orchestrated fashion, allow them to be destroyed, and recreate them with the new MySQL build, updating both minions and masters.

With these strategies in place, Uber is no longer dependent on Puppet for database updates. As Uber describes it, an agent runs on each host with a “goal state” file stored locally. Every 30 seconds, much like Puppet used to, the agent starts a process that deploys the latest configuration.

The Agent Process looks like this:

- Is the container running? If not, create one and start it.

- Check replication topology: master or minion.

- Check various MySQL parameters: read_only, super_read_only, sync_binlog.

- Masters are writable and minions are read-only.

- On minions, for performance related reasons, we turn off binlog fsync.
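The steps above can be sketched as a single reconciliation pass. This is a simplified illustration, not Uber's agent: the container is an in-memory stand-in rather than a real Docker container, the per-role values are assumptions, and "turning off binlog fsync" is assumed to mean setting MySQL's `sync_binlog` variable to 0.

```python
from dataclasses import dataclass, field

# Assumed per-role MySQL settings; the values are illustrative.
ROLE_SETTINGS = {
    "master": {"read_only": "OFF", "super_read_only": "OFF", "sync_binlog": "1"},
    "minion": {"read_only": "ON", "super_read_only": "ON", "sync_binlog": "0"},
}


@dataclass
class FakeContainer:
    """In-memory stand-in for the MySQL container on one host."""
    running: bool = False
    variables: dict = field(default_factory=dict)


def reconcile(role, container):
    """Run one agent pass and return the corrective actions taken."""
    actions = []
    if not container.running:
        container.running = True  # a real agent would create and start it
        actions.append("start container")
    for name, wanted in ROLE_SETTINGS[role].items():
        if container.variables.get(name) != wanted:
            container.variables[name] = wanted
            actions.append(f"set {name}={wanted}")
    return actions


if __name__ == "__main__":
    minion = FakeContainer()
    print(reconcile("minion", minion))  # starts the container, applies settings
    print(reconcile("minion", minion))  # already at goal state: []
```

A real agent would repeat this pass on a timer (every 30 seconds in the description above) and talk to Docker and MySQL instead of mutating a local object. The important property is that a pass is idempotent: running it against a healthy container changes nothing.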

Uber provides a great use case for containers in production and their roadmap is certainly something DevOps leaders should closely examine to see if containers would work in their organizations.

Tying it All Together

If 2017 taught us anything about succeeding with database automation, it’s that organizations focused on delivering immediate customer value to every part of their businesses are increasingly committing themselves to DevOps principles.

Companies like GitHub and Uber are already reaping the rewards of a developer- and customer-first approach.

By leading with source control, continuous deployment, and a strategy for automating database updates, both unicorns are improving their development teams’ ability to deploy feature changes, fixes, and updates almost as soon as developers finish coding them.

This improved delivery time also leads to more stable, higher-quality builds than previous releases.
